Author: Kate Sunners

I was lucky enough to attend the AES 2017 International Evaluation Conference this month in Canberra, and wanted to share some of my key learnings.

Several threads ran throughout the conference, reiterated by a number of the speakers. One of the key themes that resonated with me was the importance of co-designing programs and evaluations with beneficiaries. Involving the marginalised groups that non-profits are set up to serve in the evaluation process builds their capacity and empowers them.

Opening speaker Sandra Matheson (Institute for Public Education British Columbia) argued that evaluation has a role in ‘speaking truth to the powerless’ to address social inequality and related issues. This stands in stark contrast to ‘speaking truth to power’ (i.e. using evaluations to advocate to, or lobby, governments, corporations and the like), which can often end with the truth being reshaped to fit the worldview of the powerful, or ignored entirely. Matheson argued that evaluation data can instead be used to educate and equip beneficiaries to be active participants in program and evaluation design.

For example, redressing the systemic inequality experienced by Indigenous Australians requires evaluators to acknowledge and value existing Indigenous knowledge, involving Indigenous beneficiary groups in setting the terms of what counts as a key marker of a program's success. We saw some great examples of this, including the Kanyirninpa Jukurrpa Martu Leadership Program, whose program and evaluation were constructed with and for Martu people using Martu conceptions of strength, wellness and knowledge.

Dr Squirrel Main (The Ian Potter Foundation) reiterated this, saying that the best evaluations have three key elements: stakeholder engagement, capacity building as part of delivering the evaluation, and external benchmarking.

Another key theme was how to identify the mechanisms in a program or intervention that actually produce its outcomes and drive social change.

Damien Sweeney (Clear Horizon) challenged us to ask whether we are basing our evaluations on a Theory of Hope or a Theory of Change. A ‘Theory of Hope’ assumes that if we provide advocacy and information, we can change a person’s attitude and lead them to make the ‘right choice’. A Theory of Change, by contrast, first asks what social problem our program aims to address. It then requires observation and inquiry into the particular behaviours that cause that problem and why those behaviours change. Finally, it looks at identifying barriers to change and developing strategies within our programs to overcome them.

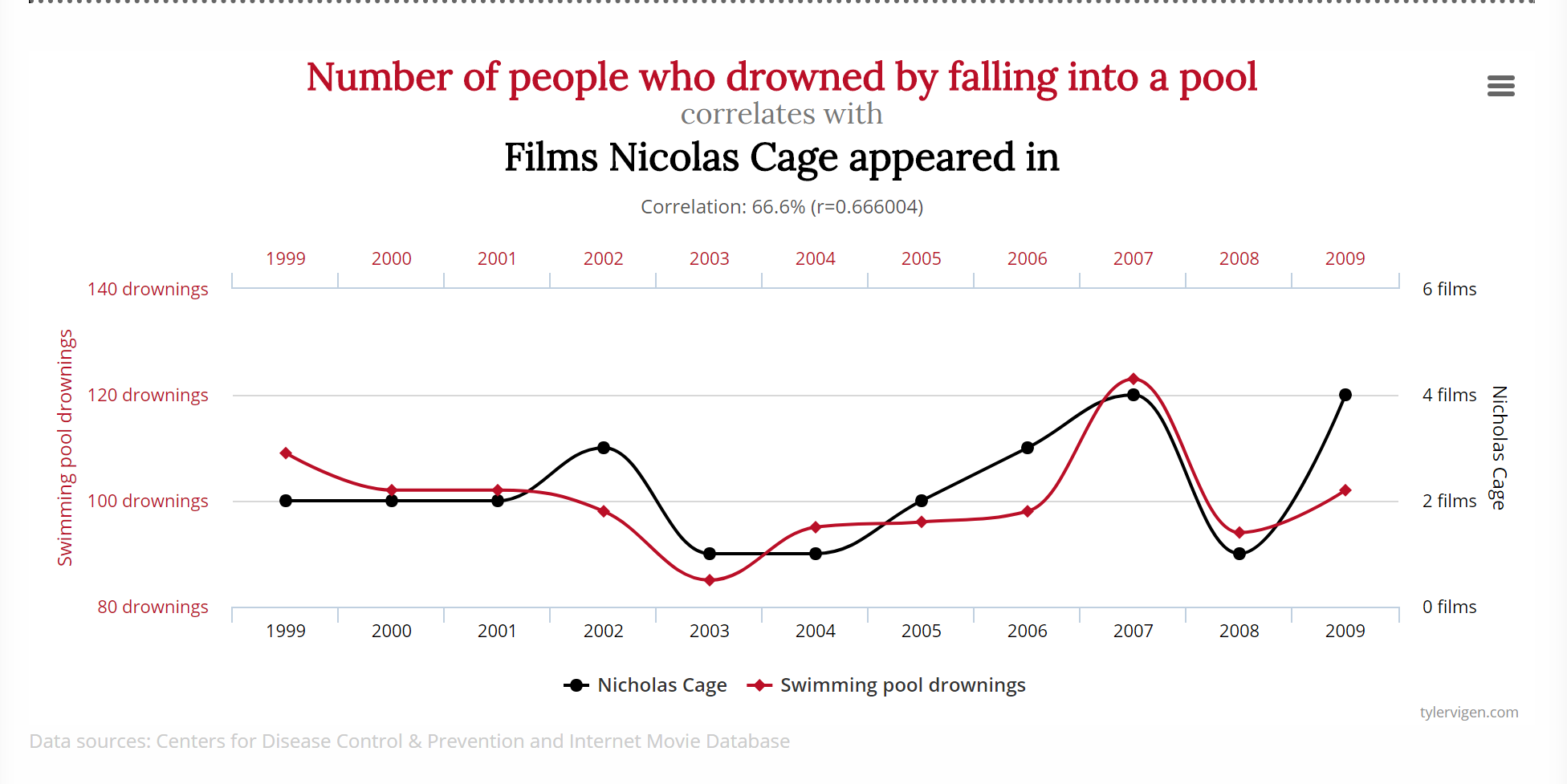

Scott Bayley (DFAT) spoke about how to examine cause and effect in evaluation. To establish an association between a program and an outcome, first ensure that the time order is correct (the program is delivered, then the outcome follows), and then rule out alternative explanations (including pure chance and methodological error!). Human brains are always looking for patterns, sometimes a little too hard, and so spurious correlations are born. You’ll be happy to hear there’s a fantastic website that hilariously shows the absurd conclusions that can follow from getting cause and effect wrong and not examining alternative explanations!

My favourite:

http://www.tylervigen.com/spurious-correlations

The Try Test Learn fund, of Budget 2016 fame, made an appearance in two presentations. A Department of Social Services (DSS) program with 16 years of data on welfare use behind it, the Try Test Learn fund will first target young parents, young students and young carers. In February this year, DSS solicited ideas for helping people at risk of long-term welfare dependence to remain and flourish in long-term employment, engaging stakeholder organisations and individuals to discuss barriers and promising approaches through https://Engage.Dss.Gov.Au. Following a philosophy of ‘failing fast’, projects that create excellent outcomes and impact for the target populations will be continued and scaled, while those that do not will be dropped. Funding announcements for the fund are likely to be made before the end of the year, with the next round of ideas to be solicited in early 2018.

Most useful for those considering applying for Perpetual IMPACT this year was a presentation from Carolyn Hooper of Allen & Clarke NZ on the 4 E’s approach to Social Return on Investment (SROI). Rather than focusing on the quantitative data that would usually drive an SROI study, Carolyn’s retrospective SROI evaluation of a project focused instead on equity (was the money spent fairly?), economy (spending less), efficiency (spending well) and effectiveness (spending wisely). Given that Perpetual IMPACT applications focus on efficiency and effectiveness, these measures are particularly important for grant-seekers to understand. Beam Exchange has a great infographic showing where the 4 E’s fit into the Program Logic pipeline, and there are more resources on the 4 E’s on the Better Evaluation website.

Dugan Fraser (RAITH Foundation, South Africa) summed up the major themes of the conference in his fantastic speech about how evaluation can play a part in strengthening democracy:

“Social change is created through strong organisations and good evaluation and monitoring…. Evaluation needs to go beyond evidence to promote inclusion, dialogue and education.”